The Quest for the Perfect Search Engine

Speculations by Stefan Stenudd

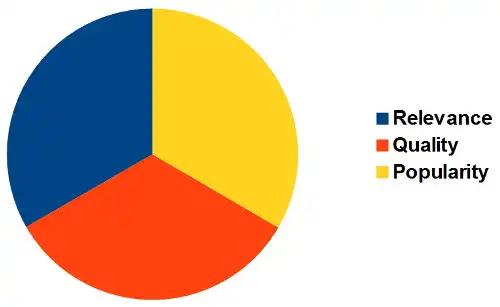

What's happened to Google is easy enough to compute: In their effort to fight unwanted Search Engine Optimization (SEO), they've changed their algorithm without properly considering the effect on the user. Google may have gotten rid of sites cynically constructed for the sole purpose of stealing top positions in the search results, but by doing so they also swept away links of high value to the users. Their result lists got a conformity that is quite disappointing, since it leads to a boring surfer experience.

They seem to have failed to consider a very simple mathematical fact: when one criterion is reduced, the others swell. Making one piece of the cake smaller also leads to the others getting bigger. Fewer criteria decide the result.

As far as I can see, there are three major criteria of importance in getting search results satisfying to the user: relevance, quality, and popularity.

Google also rewards websites for their size, and very much so. This is very strange because it has almost no relevance to the user. It may simply be a priority inspired by Google's own size. As the saying goes: Birds of a feather flock together. For the user, size is meaningless compared to relevance, quality, and popularity.

Relevance describes to what extent a website is about the subject of the search. A search engine algorithm decides this by evaluating search word frequency and prominence in URL, titles, texts, and so on.

Quality is the measure of how well the search subject is treated. Of course, this is not easy to compute. What search engines evaluate are the links to the webpage in question: how many they are and the quality of the websites they come from. The latter easily becomes sort of a vicious circle, when websites thereby increase the estimated quality of one another in sort of a loop. Another way to go about it is to measure links from such types of websites as those with the edu suffix, newspapers, online encyclopedias, forums on the search word topic, et cetera.
That, too, is uncertain and not necessarily relevant to the user, but what to do?

Popularity is the nirvana of our time, a more or less outspoken belief in things being better the more people favor them. That can indeed be questioned, although it has some relevance to the user. When we make our searches, we like to see what's the talk of the town regarding that topic. We want to see the most popular webpages about the topic in the listing, although we might not always feel the need to click them.

Popularity can be decided by the number of visits to the sites. What the search engines also read is again the number of links to the site, like they do in the case of quality. That's probably why Google already at its outset decided to put such emphasis on mapping links. They are relevant to two of the three criteria. But they are no guarantee of a pleasing user experience, simply because behind most searches is a need for specific information, which is best measured by the relevance criterion. Unfortunately, it's also the criterion most exposed to malicious SEO, often to the point of the relevance being a chimera.

Now, if the three criteria are valued equally by a search engine algorithm (which is hardly the case with any search engine), it looks like this:

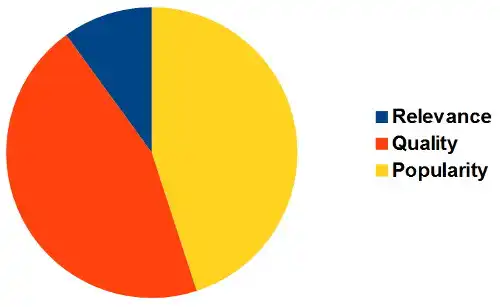

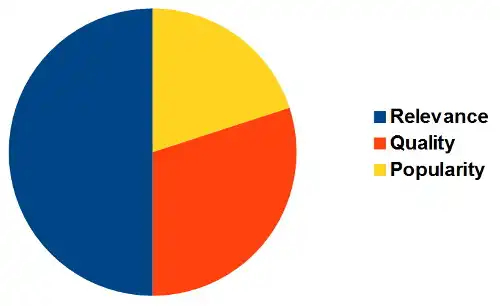

That's what Google did, when changing their algorithm in their aggressive battle against malicious SEO uses. The purpose might have been commendable, but the result may even be the opposite of the intent, since fewer criteria make methodical SEO easier.

So, what would be the ideal weight distribution of the three criteria in the eyes of the user?

No doubt, relevance is of primary importance to any search engine user. We want information on the topic indicated by the search words we use. Any search engine unable to give us that in the results would be useless.

Next comes quality. We prefer search results filtering out nonsense and misleading sites that use search words to catch visitors but don't deliver the information requested. If this is not done to some extent, we would just drown in an ocean of spam, whatever we seek. Or porn.

Lastly, popularity has some bearing, depending on what we search for. Man is a social being, needing to be aware of what goes on in the minds of fellow men. That's why we give significant value to popularity. In a search on a topic, we would also like to have indications of how other people relate to the topic and what they are likely to find out about it.

In a diagram like the ones above, a user-friendly division of the three criteria would be something like: relevance 50%, quality 30%, and popularity 20%.
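To make the arithmetic concrete, here is a minimal sketch in Python of how such a weighting could play out. Everything in it is an assumption for illustration: the function names, the two sample pages, and their per-criterion scores are invented, and nothing here reflects how Google or any other search engine actually computes its rankings. It only shows the cake effect (shrink one piece and the others swell) and how that can flip the order of two results.

```python
from typing import Dict

# The user-friendly split suggested in the text: 50% / 30% / 20%.
USER_FRIENDLY_WEIGHTS = {"relevance": 0.5, "quality": 0.3, "popularity": 0.2}


def normalize(weights: Dict[str, float]) -> Dict[str, float]:
    """Rescale raw weights so that they sum to 1, i.e. the whole cake."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    return {name: value / total for name, value in weights.items()}


def score(page: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of a page's criterion scores (each assumed to lie in 0..1)."""
    shares = normalize(weights)
    return sum(shares[name] * page.get(name, 0.0) for name in shares)


# Equal raw weights: every criterion gets one third of the cake.
equal = normalize({"relevance": 1.0, "quality": 1.0, "popularity": 1.0})

# Hypothetical change: relevance is cut to a quarter of its raw weight.
# Quality and popularity are untouched, yet their shares of the cake grow.
reduced = normalize({"relevance": 0.25, "quality": 1.0, "popularity": 1.0})

# Two invented pages with made-up scores per criterion.
on_topic_small_site = {"relevance": 0.9, "quality": 0.6, "popularity": 0.2}
off_topic_megasite = {"relevance": 0.3, "quality": 0.6, "popularity": 0.95}

if __name__ == "__main__":
    print("equal shares:  ", equal)
    print("reduced shares:", reduced)
    for label, weights in [("50/30/20", USER_FRIENDLY_WEIGHTS),
                           ("relevance reduced", reduced)]:
        small = score(on_topic_small_site, weights)
        mega = score(off_topic_megasite, weights)
        print(f"{label}: small on-topic site {small:.2f}, megasite {mega:.2f}")
```

With the 50/30/20 split, the small on-topic site outranks the megasite. With relevance reduced, the order is reversed, although neither page has changed at all.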

Also, Google has become extremely depreciative of the most important relevance ingredient: the actual search words. Therefore, sites that have very little to do with the topic can get surprisingly high ranks, even when they are obviously all about something else.

Actually, I even see what seems to be a depreciation of the old Google credo: the links to the sites as a measure of their quality. Many top results lack significant numbers of links, and even more so if the links are examined with some quality filter.

Instead, Google focuses more and more on popularity. The same old megasites appear again and again, whatever the topic and however substantially or superficially they treat it. So, size and popularity: those are the new icons cherished at Google. Not very exciting.

Since the biggest sites often have the most visitors and vice versa, it leads to a terrible conformity in the Google search results. I think it can lead to Google's downfall, if they don't change this radically. Users get bored with this easily recognizable repetition in the searches, and start longing for alternatives.

And they do already exist. The closest one in the race is Bing (also the engine for Yahoo), which is very interesting to compare to Google. Especially with search words for which there is great competition to be on top, it's my experience that Bing gives more varied and interesting results than Google does. Often strikingly so.

If my impression is correct, it can quickly lead to an escalating number of users shifting their habits. And then they are not very prone to shift back. We may be reluctant to change our habits, but even more so to change them back. The past is not to be revisited other than by memory. If that's not where Google is satisfied to reside, it should rethink its quest.

Stefan Stenudd February 16, 2013
